Learn how to measure the quality of AI translation services with key metrics and evaluation techniques for accurate and reliable translations.

What is linguistic quality assurance (LQA)?

LQA is the process of evaluating the quality of translated texts against defined criteria. These criteria are typically based on one or more of the following three quality metrics:

- Adequacy (Accuracy, Fidelity): Measures how well the original text’s meaning is conveyed to the translated text.

- Comprehensibility (Intelligibility): Reflects how well a reader can understand the translated text without access to the original text.

- Fluency (Grammaticality): Describes how well the translated text follows the grammar of the target language.

Human Judgement: What is subjective LQA?

Although the criteria that define translation quality are fixed, the scores assigned against them vary with the experience of the translator doing the evaluation. Scores can change even when the same translator evaluates the same text at a different time. That is why manual human judgment is a subjective form of LQA.

In addition, a translator can proofread only about 10 thousand words per day. If you are a SaaS company with a lot of content, the time and budget you need to devote to this process will keep growing.

To make more objective evaluations, you can choose a different language service provider (LSP) to perform LQA. However, keep in mind that you will still have difficulty organizing the LQA outputs and your costs will increase at the same rate.

Automated LQA: What is objective LQA?

Manual translation quality assurance has several disadvantages: it is time-consuming, expensive, not tunable, not reproducible, and not scalable. However, this does not mean that texts translated by human translators have no role in evaluating translation quality. Almost all algorithms use reference translations from human translators in their quality assessment.

The main advantage of automated LQA is objective evaluation. These techniques are faster, cheaper, reproducible, and scalable. Whenever you test your translations, you get the same scores for the same content, which gives you a clear and objective path for improving your translations.

How to measure the translation quality of MT systems or LLMs automatically?

A SaaS company needs to be able to measure the translation quality of AI translation tools and to repeat these measurements periodically as part of translation quality management. There are many AI translation service providers in the market, and each is constantly being developed by different software development teams using different datasets. This means that translation quality may change constantly.

You can try to measure changes in translation quality manually. However, choosing the measurement methods requires expertise, and you are very likely to make a subjective and erroneous assessment. In addition, the technology is developing so fast that by the time you reach a conclusion, it may already be out of date.

For all these reasons, it is necessary to use an automatic, objective, and repeatable evaluation method or to choose the tools that can periodically make these evaluations for you.

Automated Pre-Translation LQA

It is very difficult to measure quality comprehensively during translation. The text you are translating may not be large or varied enough to reveal the quality of the translation. In addition, because you need to compare alternative translations, the text must be translated with every AI translation service provider. This significantly reduces your overall translation speed, not to mention the cost of managing the technical integrations.

To prevent such problems, you can measure the translation quality of an AI translation system before you start translating. If you have a bilingual corpus, a special data set of sentences translated by human translators and prepared for many language pairs and sectors, you can send its source sentences to the AI translation services, take the results, and compare them with the human translations.
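The pre-translation check described above can be sketched roughly as follows. This is a minimal illustration under several assumptions: `CORPUS`, `engine_a`, `engine_b`, `token_overlap`, and `rank_engines` are hypothetical names, the two engines are lookup-table stand-ins for real MT or LLM API calls, and the metric is a deliberately simple placeholder that a real evaluation would replace with a proper similarity measure.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical bilingual corpus: (source sentence, human reference translation).
CORPUS: List[Tuple[str, str]] = [
    ("Guten Morgen", "good morning"),
    ("Wie geht es dir?", "how are you?"),
]


def token_overlap(candidate: str, reference: str) -> float:
    """Placeholder metric: share of reference tokens that also occur in the candidate."""
    cand_tokens = set(candidate.lower().split())
    ref_tokens = reference.lower().split()
    if not ref_tokens:
        return 0.0
    return sum(tok in cand_tokens for tok in ref_tokens) / len(ref_tokens)


def rank_engines(
    engines: Dict[str, Callable[[str], str]],
    corpus: List[Tuple[str, str]],
    metric: Callable[[str, str], float] = token_overlap,
) -> List[Tuple[str, float]]:
    """Translate every source sentence with every engine and rank engines by mean score."""
    scores = {}
    for name, translate in engines.items():
        total = sum(metric(translate(src), ref) for src, ref in corpus)
        scores[name] = total / len(corpus)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Stand-ins for real translation services (assumption: a real setup would call an API here).
def engine_a(text: str) -> str:
    return {"Guten Morgen": "good morning", "Wie geht es dir?": "how are you?"}.get(text, "")


def engine_b(text: str) -> str:
    return {"Guten Morgen": "good morning", "Wie geht es dir?": "how goes it?"}.get(text, "")


ranking = rank_engines({"engine_a": engine_a, "engine_b": engine_b}, CORPUS)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

Because the corpus and the metric are fixed, rerunning the script on the same engine outputs yields the same ranking, which is the reproducibility that manual review lacks.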

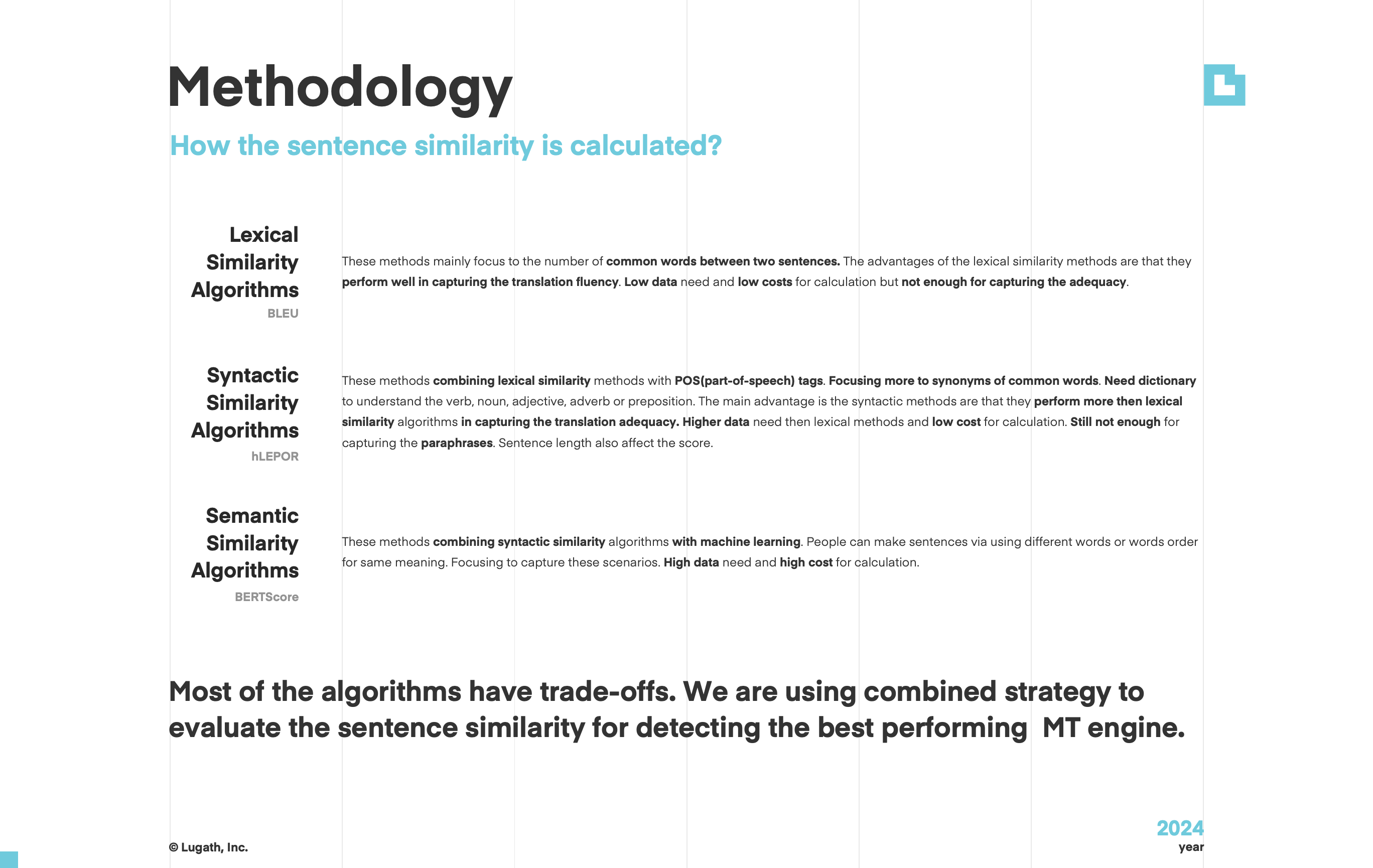

In the computer science literature, this comparison is known as the sentence similarity problem, and similarity measurement techniques can be grouped into three categories. In terms of LQA, the goal is to determine how similar the translations received from these systems are to the translations of human translators.

1. Lexical Similarity Algorithms

These methods mainly focus on the number of words that two sentences have in common. Their advantage is that they capture translation fluency well, with low data requirements and low computation cost. However, they are not sufficient for capturing adequacy.
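As a rough illustration of the idea, the sketch below scores a candidate translation by the words it shares with a human reference, using a unigram F1 over clipped word counts. The function name `lexical_f1` and the example sentences are assumptions made for this sketch; it is not any specific published metric.

```python
from collections import Counter


def lexical_f1(candidate: str, reference: str) -> float:
    """Unigram F1 based on the words two sentences have in common."""
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())
    # Counter intersection keeps, per word, the smaller of the two counts.
    overlap = sum((cand_counts & ref_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


reference = "the cat sat on the mat"
same_words = lexical_f1("the cat sat on the mat", reference)
paraphrase = lexical_f1("a feline rested upon the rug", reference)

print(f"identical wording: {same_words:.2f}")
print(f"paraphrase:        {paraphrase:.2f}")
```

The paraphrase shares only the word "the" with the reference, so it scores far lower than the identical sentence. This shows why counting common words alone is not enough to capture adequacy.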

2. Syntactic Similarity Algorithms

These methods combine lexical similarity methods with part-of-speech (POS) tags, generally by using POS tag patterns. They focus more on synonyms of common words and need a dictionary to identify verbs, nouns, adjectives, adverbs, and prepositions. Their main advantage is that they capture translation adequacy better than lexical similarity algorithms. They need more data than lexical methods, while computation cost remains low. They are still not sufficient for capturing paraphrases, and sentence length also affects the score.
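A toy sketch of this approach is shown below. It assumes a tiny hand-written dictionary, `LEXICON`, that maps each word to a POS tag and a canonical synonym. The dictionary entries, the synonym groupings, and the function names are all hypothetical; a real system would rely on a trained POS tagger and a full lexical resource.

```python
from collections import Counter
from typing import List, Tuple

# Hypothetical mini-dictionary: word -> (POS tag, canonical synonym).
LEXICON = {
    "the": ("DET", "the"),
    "a": ("DET", "a"),
    "cat": ("NOUN", "cat"),
    "feline": ("NOUN", "cat"),
    "mat": ("NOUN", "mat"),
    "rug": ("NOUN", "mat"),
    "sat": ("VERB", "sit"),
    "rested": ("VERB", "sit"),
    "on": ("ADP", "on"),
    "upon": ("ADP", "on"),
}


def tag(sentence: str) -> List[Tuple[str, str]]:
    """Map each word to (POS tag, canonical form); unknown words get the tag 'X'."""
    return [LEXICON.get(word, ("X", word)) for word in sentence.lower().split()]


def syntactic_f1(candidate: str, reference: str) -> float:
    """F1 over (POS tag, canonical form) pairs instead of raw words."""
    cand_counts = Counter(tag(candidate))
    ref_counts = Counter(tag(reference))
    overlap = sum((cand_counts & ref_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


score = syntactic_f1("a feline rested upon the rug", "the cat sat on the mat")
print(f"syntactic score: {score:.2f}")
```

A comparison on raw words would match only "the" in these two sentences. Matching on tagged synonyms recovers five of the six tokens, but this only works when every word is covered by the dictionary.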

3. Semantic Similarity Algorithms

These methods combine syntactic similarity algorithms with machine learning. People can express the same meaning using different words, and semantic methods focus on capturing these cases. They have high data requirements and high computation cost.
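One common way to compare meaning rather than wording is to map each sentence to a vector and measure the cosine similarity between vectors. The sketch below shows only that comparison step. The vectors in `TOY_EMBEDDINGS` are hand-written numbers for illustration, not the output of any model; in practice they would come from a trained sentence-embedding model, which is where the high data and computation costs arise.

```python
import math
from typing import Dict, List

# Hypothetical sentence vectors, invented for this example only.
TOY_EMBEDDINGS: Dict[str, List[float]] = {
    "the cat sat on the mat": [0.9, 0.1, 0.3],
    "a feline rested upon the rug": [0.8, 0.2, 0.4],
    "stock prices fell sharply": [0.1, 0.9, 0.2],
}


def cosine_similarity(u: List[float], v: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


reference_vec = TOY_EMBEDDINGS["the cat sat on the mat"]
paraphrase_sim = cosine_similarity(reference_vec, TOY_EMBEDDINGS["a feline rested upon the rug"])
unrelated_sim = cosine_similarity(reference_vec, TOY_EMBEDDINGS["stock prices fell sharply"])

print(f"paraphrase: {paraphrase_sim:.2f}")
print(f"unrelated:  {unrelated_sim:.2f}")
```

With these invented vectors, the paraphrase scores much closer to the reference than the unrelated sentence, even though it shares almost no words with it.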

Automated Post-Translation LQA

Automated post-translation LQA utilizes software tools to ensure the quality of translated content by focusing on grammar, punctuation, spelling, style, terminology, and consistency. These tools, such as Xbench, Verifika, Linguistic Tool Box, LexiQA, and QA Distiller, identify issues like untranslated segments, partial translations, inconsistencies, and formatting errors, enhancing efficiency and accuracy while reducing human error. Typically used alongside human review, automated LQA combines the speed of automation with the nuanced understanding of human linguists, benefiting language service providers (LSPs) and translators.

To learn more, see the article "Automated Post-Translation LQA: How can human translation errors be detected automatically?".

Summary

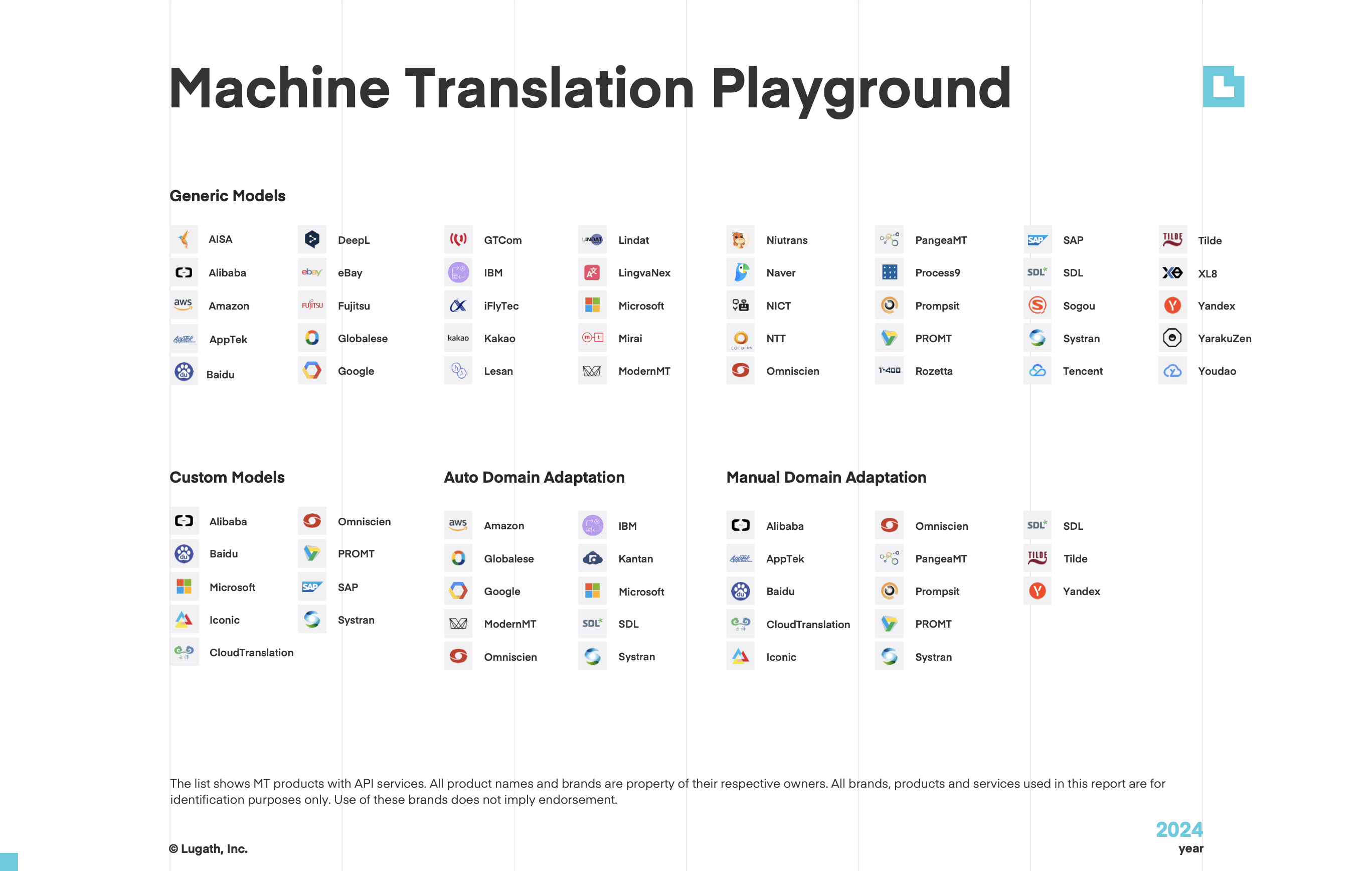

Lugath periodically measures the translation quality of AI translation services. Most of the algorithms have trade-offs, so we use a combined strategy to evaluate sentence similarity and detect the best-performing MT engine.

If you want to learn more, you can download the MT Assessment Report 2024.